Bagging

Bagging方法属于ensemble类的方法,表示 Bootstrap AGGregetING. Bagging方法由bootstrap和aggregation两部分组成.

Method

Bootstrapping. Given a training set $D=\left\{\left(\mathbf{x}_{1}, y_{1}\right), \ldots\left(\mathbf{x}_{n}, y_{n}\right)\right\}$, we first sample (with replacement) $T$ sets of $n$ elements from $D$, forming $T$ quasi replica training sets $\{D_{1}, D_{2}, \ldots D_{T}\}$. Then we train a machine $f_i$ on each small dataset $D_i, \space i=1,\cdots, T$. Bootstrapping中最需要理解的关键点是 sample with replacement过程。需要注意的是每个dataset包含的数据量都是和原本的dataset相同的,得到的数据集是可以存在重复元素的数据集. 而并不是从大数据集中分割出小数据集。这么做的意义是为了保证每个子数据集中的元素都满足i.i.d条件,从而达到降低variance的效果。

Aggregation. Aggregation阶段我们将在小数据集上训练的base learner $\{f_i\}_{i=1}^{T}$ 进行某种方式的聚合.

For regression:

For classification, we can do average and decide or the majority voting:

Theoretical Guarantees

As for the question why it helps improve the performance compared with the learner which is trained on the whole dataset, we need to use the formal form of the EPE (recall my blog of the bias-variance decomposition).

If we are able to access the input distribution, we can sample infinite number of independent datasets of size $n$: $\mathcal D=\{D_i\}_{i=1}^\infty$ and average the learners to reduce the variance. Then the variance would be close to zero, simply because:

Unfortunately, we can’t get access to so large number of the datsets. But in Bagging algorithm, the method of sampling with replacement guarantees that each sampled dataset is a dataset consisting of i.i.d samples, which helps reduce the variance of the base learners. To note here, if we sample $m<n$ data samples from the original dataset $D$, then there is no theoretical proof that the variance is reduced compared with the learner trained on the whole dataset: since the bias-variance decomposition is conditioned on the dataset size.

Random Forest

Bagging的理论证明告诉我们它能够显著优化base learner的variance而对bias没有优化的效果. 那么什么样的base learner能够从bagging上benefit最大?答案是具有high variance and low bias的base learner: decision tree.

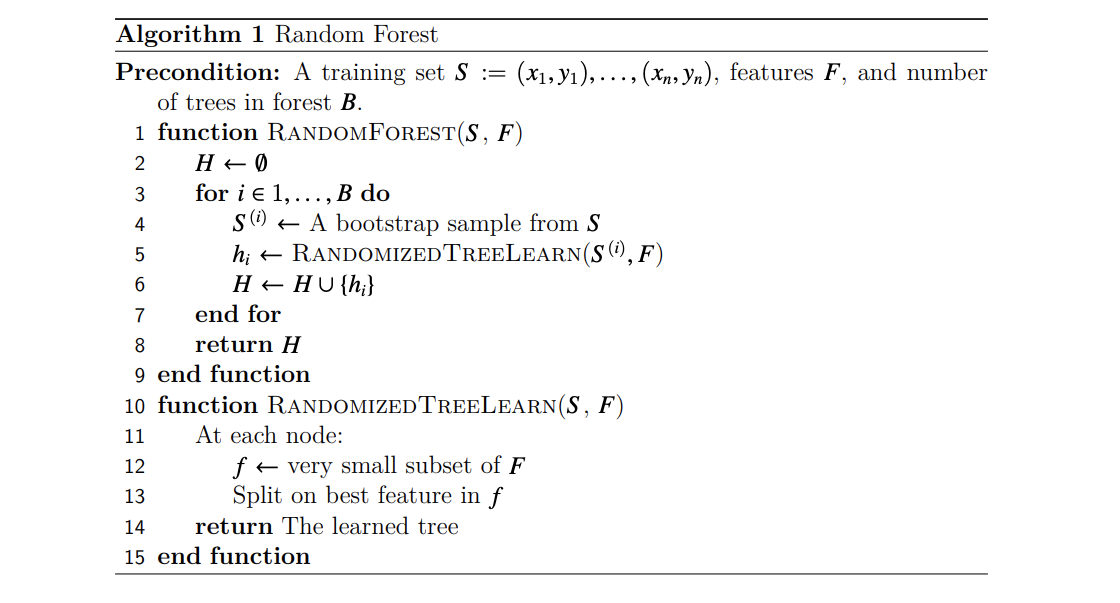

最常见的关于RF的错误认识是认为RF单一地采取了random feature selection. 但事实上RF作为bagging方法,构建i.i.d的sub-dataset依旧是不可缺少的. 所以RF是同时具备random samples和random feature selection这两个随机性的。在构建每一个单独的decision tree时,参与影响每一次decision boundary决策的是随机选择的$K$个feature. 而通过实验人们发现 $K=\sqrt {d}$ 往往是最优的选择.

关于Random Forest最美妙的性质在于:

- 首先RF几乎不需要任何超参,唯一的超参$K$也在经验上有非常好的设置 $K=\sqrt {d}$.

- 其次RF不需要进行train-val的数据集划分:因为在进行dataset sample时sub-dataset一定会出现重复的样本,那么原数据集中没有被采样到的样本就可以用于这个base learner的validation,这叫做Out Of Bag (OOB) validation.

- 最后是RF的训练是增量式的,不存在sub-dataset number这样的超参,我们只需要在error曲线平滑时停止训练即可.